爱可可 AI Paper Picks (October 13)

LG - Machine Learning CV - Computer Vision CL - Computation and Language

1. [CV]*GRF: Learning a General Radiance Field for 3D Scene Representation and Rendering

A Trevithick, B Yang

[Williams College & University of Oxford]

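GRF is an implicit neural function that represents and renders arbitrarily complex 3D scenes in a single network from 2D observations alone. It applies the principles of multi-view geometry to build its internal representation from the observed 2D views, mapping 2D pixel features precisely into 3D space so that the learned implicit representation is meaningful and consistent across views. An attention mechanism handles visual occlusion implicitly. With GRF, realistic novel 2D views can be synthesized.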

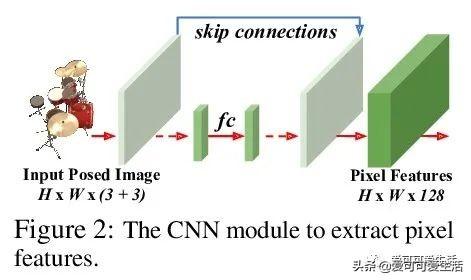

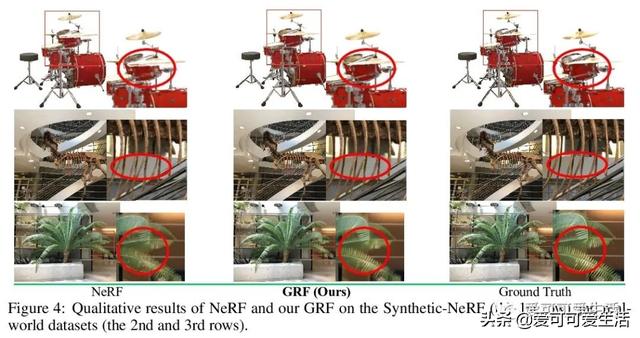

We present a simple yet powerful implicit neural function that can represent and render arbitrarily complex 3D scenes in a single network only from 2D observations. The function models 3D scenes as a general radiance field, which takes a set of posed 2D images as input, constructs an internal representation for each 3D point of the scene, and renders the corresponding appearance and geometry of any 3D point viewing from an arbitrary angle. The key to our approach is to explicitly integrate the principle of multi-view geometry to obtain the internal representations from observed 2D views, guaranteeing the learned implicit representations meaningful and multi-view consistent. In addition, we introduce an effective neural module to learn general features for each pixel in 2D images, allowing the constructed internal 3D representations to be remarkably general as well. Extensive experiments demonstrate the superiority of our approach.
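The pipeline described in the abstract (posed 2D views in, one representation per 3D point out) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the view dictionary layout, the nearest-pixel lookup, and the fixed scoring vector `w` are all assumptions made for illustration. In the paper the per-pixel features and the attention weights are learned; here they are plain lists and a fixed softmax score.

```python
import math

def project(point, R, t, fx, fy, cx, cy):
    """Pinhole projection of a world-space 3D point into one posed view.

    Returns (u, v, depth) in pixel coordinates, or None if the point
    lies behind the camera.
    """
    # World -> camera coordinates: X_cam = R @ X + t
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy, xc[2]

def gather_feature(feature_map, u, v):
    """Nearest-pixel lookup; feature_map[row][col] is a list of floats."""
    row, col = int(round(v)), int(round(u))
    if 0 <= row < len(feature_map) and 0 <= col < len(feature_map[0]):
        return feature_map[row][col]
    return None

def attention_pool(features, w):
    """Softmax-weighted sum of per-view features.

    `w` is a hypothetical fixed scoring vector standing in for a
    learned attention module.
    """
    scores = [sum(wi * fi for wi, fi in zip(w, f)) for f in features]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    dim = len(features[0])
    return [sum(e / z * f[k] for e, f in zip(exps, features)) for k in range(dim)]

def point_representation(point, views, w):
    """Aggregate the pixel features that a 3D point projects to in each view."""
    feats = []
    for view in views:
        proj = project(point, view["R"], view["t"], *view["intrinsics"])
        if proj is None:
            continue
        f = gather_feature(view["features"], proj[0], proj[1])
        if f is not None:
            feats.append(f)
    return attention_pool(feats, w) if feats else None
```

Because each view contributes only the feature at the pixel the 3D point actually projects to, the aggregated vector is tied to scene geometry, and the softmax weighting lets some views count less than others, which is the role the summary assigns to attention for occlusion.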

2. [LG]*No MCMC for me: Amortized sampling for fast and stable training of energy-based models

W Grathwohl, J Kelly, M Hashemi, M Norouzi, K Swersky, D Duvenaud

[Google Research & University of Toronto]

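VERA is a simple method for training energy-based models (EBMs) at scale. An entropy-regularized generator amortizes the MCMC sampling normally used in EBM training, and a fast variational approximation improves on prior MCMC-based entropy regularization methods. Applied to the Joint Energy Model (JEM), the estimator matches the original performance while training much faster.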

Energy-Based Models (EBMs) present a flexible and appealing way to represent uncertainty. Despite recent advances, training EBMs on high-dimensional data remains a challenging problem as the state-of-the-art approaches are costly, unstable, and require considerable tuning and domain expertise to apply successfully. In this work we present a simple method for training EBMs at scale which uses an entropy-regularized generator to amortize the MCMC sampling typically used in EBM training. We improve upon prior MCMC-based entropy regularization methods with a fast variational approximation. We demonstrate the effectiveness of our approach by using it to train tractable likelihood models. Next, we apply our estimator to the recently proposed Joint Energy Model (JEM), where we match the original performance with faster and stable training. This allows us to extend JEM models to semi-supervised classification on tabular data from a variety of continuous domains.
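One standard way to see why an entropy-regularized generator can stand in for sampling is the variational identity log Z = max_q E_q[f(x)] + H(q), where f is the unnormalized log-density and the maximum is reached when q equals the model distribution. The toy check below verifies this on a four-state discrete space. It is a sketch under assumptions, not the paper's method: the function names and the example values are made up, the state space is finite so that log Z can be computed exactly, and the generator q is an explicit probability vector rather than a neural network.

```python
import math

def log_partition(logits):
    """log Z = log sum_x exp(f(x)) over a finite state space, computed stably."""
    m = max(logits)
    return m + math.log(sum(math.exp(f - m) for f in logits))

def variational_bound(logits, q):
    """E_q[f(x)] + H(q): a lower bound on log Z for any distribution q."""
    expected_f = sum(qi * fi for qi, fi in zip(q, logits))
    entropy = -sum(qi * math.log(qi) for qi in q if qi > 0)
    return expected_f + entropy

def softmax(logits):
    """The model distribution p(x) = exp(f(x)) / Z."""
    m = max(logits)
    exps = [math.exp(f - m) for f in logits]
    z = sum(exps)
    return [e / z for e in exps]

# Hypothetical unnormalized log-densities for four states.
f = [1.0, 0.5, -2.0, 0.0]
log_z = log_partition(f)

uniform = [0.25] * 4
gap_uniform = log_z - variational_bound(f, uniform)    # strictly positive
gap_model = log_z - variational_bound(f, softmax(f))   # zero up to rounding
print(gap_uniform, gap_model)
```

Dropping the entropy term would let q collapse onto the single highest-scoring state, which is why the entropy regularizer matters; in high dimensions the entropy of a generator is not available in closed form, which is where an approximation such as the one the abstract mentions comes in.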
