爱可可AI论文推介(10月15日)( 三 )

文章插图

文章插图

文章插图

文章插图

5、[IR]Pretrained Transformers for Text Ranking: BERT and Beyond

J Lin, R Nogueira, A Yates

[University of Waterloo & Max Planck Institute for Informatics]

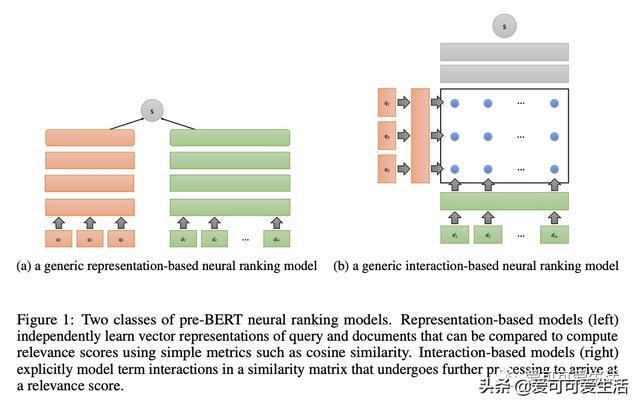

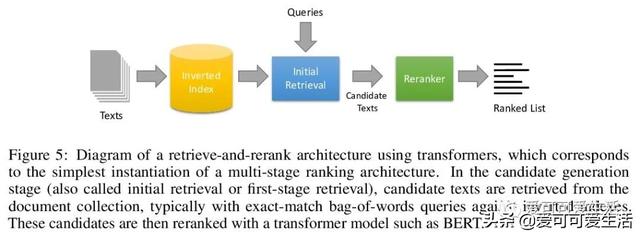

面向文本排序的预训练Transformer模型综述 , 提供了关于文本排序与Transformer神经网络结构的综述 , 其中BERT是最著名的例子 。 综述涵盖了广泛的现代技术 , 包括两大类:在多阶段排序架构中执行重排序的Transformer模型 , 以及试图直接执行排序的密集表示学习 。 重点考察长文档处理技术(超出NLP中使用的典型逐句处理方法) , 以及在处理效果(结果质量)和效率(查询延迟)之间权衡的技术 。

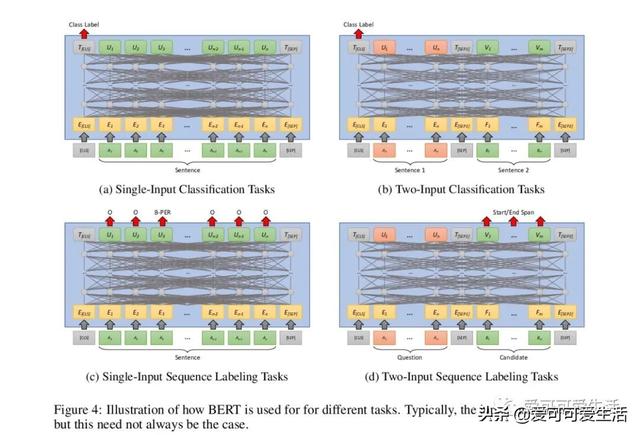

The goal of text ranking is to generate an ordered list of texts retrieved from a corpus in response to a query. Although the most common formulation of text ranking is search, instances of the task can also be found in many natural language processing applications. This survey provides an overview of text ranking with neural network architectures known as transformers, of which BERT is the best-known example. The combination of transformers and self-supervised pretraining has, without exaggeration, revolutionized the fields of natural language processing (NLP), information retrieval (IR), and beyond. In this survey, we provide a synthesis of existing work as a single point of entry for practitioners who wish to gain a better understanding of how to apply transformers to text ranking problems and researchers who wish to pursue work in this area. We cover a wide range of modern techniques, grouped into two high-level categories: transformer models that perform reranking in multi-stage ranking architectures and learned dense representations that attempt to perform ranking directly. There are two themes that pervade our survey: techniques for handling long documents, beyond the typical sentence-by-sentence processing approaches used in NLP, and techniques for addressing the tradeoff between effectiveness (result quality) and efficiency (query latency). Although transformer architectures and pretraining techniques are recent innovations, many aspects of how they are applied to text ranking are relatively well understood and represent mature techniques. However, there remain many open research questions, and thus in addition to laying out the foundations of pretrained transformers for text ranking, this survey also attempts to prognosticate where the field is heading.

文章插图

文章插图

文章插图

文章插图

文章插图

文章插图

推荐阅读

- 谷歌:想发AI论文?请保证写的是正能量

- 谷歌对内部论文进行“敏感问题”审查!讲坏话的不许发

- 2019年度中国高质量国际科技论文数排名世界第二

- 谷歌通过启动敏感话题审查来加强对旗下科学家论文的控制

- Arxiv网络科学论文摘要11篇(2020-10-12)

- 聚焦城市治理新方向,5G+智慧城市推介会在长举行

- 中国移动5G新型智慧城市全国推介会在长沙举行

- 年年都考的数字鸿沟有了新进展?彭波老师的论文给出了解答!

- 打开深度学习黑箱,牛津大学博士小姐姐分享134页毕业论文

- 爱可可AI论文推介(10月9日)

![[他人婚]被曝插足他人婚姻 《青你2》选手申冰退赛](https://img3.utuku.china.com/550x0/toutiao/20200326/5961a705-f613-40cd-b825-bc7656e59cfc.jpg)