爱可可 AI Paper Recommendations (October 17) (Part 3)

5. [LG] Neograd: gradient descent with an adaptive learning rate

M. F. Zimmer

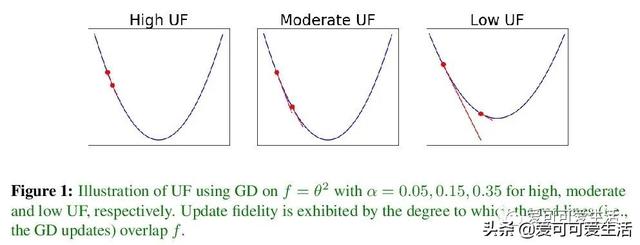

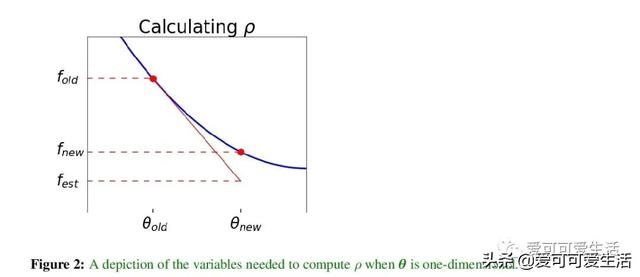

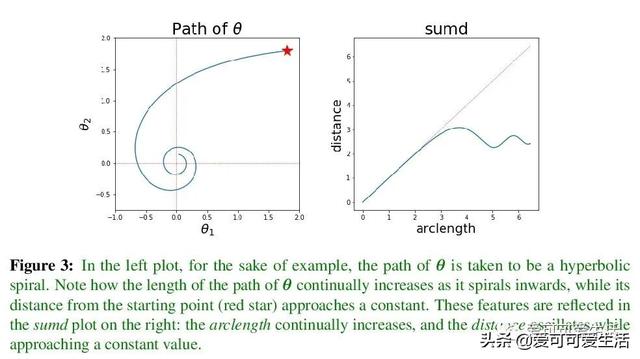

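Neograd is a family of gradient descent algorithms with an adaptive learning rate. Using a formulaic estimate based on ρ, a metric of the error in the updates, it adjusts the learning rate dynamically at every training step, so no trial runs are needed to estimate a single learning rate for the whole optimization run, and the added cost is negligible. One member of the family, NeogradM, quickly reaches much lower cost function values than other first-order algorithms, a substantial performance improvement.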

Since its inception by Cauchy in 1847, the gradient descent algorithm has been without guidance as to how to efficiently set the learning rate. This paper identifies a concept, defines metrics, and introduces algorithms to provide such guidance. The result is a family of algorithms (Neograd) based on a constant ρ ansatz, where ρ is a metric based on the error of the updates. This allows one to adjust the learning rate at each step, using a formulaic estimate based on ρ. It is now no longer necessary to do trial runs beforehand to estimate a single learning rate for an entire optimization run. The additional costs to operate this metric are trivial. One member of this family of algorithms, NeogradM, can quickly reach much lower cost function values than other first order algorithms. Comparisons are made mainly between NeogradM and Adam on an array of test functions and on a neural network model for identifying hand-written digits. The results show great performance improvements with NeogradM.
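To make the idea of a per-step learning rate concrete, here is a minimal Python sketch. It is not the paper's algorithm: the abstract does not give the definition of ρ or the update formula, so the sketch assumes ρ is the gap between the actual new cost and its first-order (linear) prediction, divided by the predicted decrease, and it rescales the learning rate by `rho_target / rho` with a clamp. The names `adaptive_gd`, `rho_target`, `quad`, and `quad_grad` are hypothetical, and no momentum is included, so this is not NeogradM either.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def adaptive_gd(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    theta: Vector,
    alpha: float = 1e-3,
    rho_target: float = 0.1,
    steps: int = 300,
) -> Tuple[Vector, float, float]:
    """Plain gradient descent that re-tunes alpha at every step.

    Assumed metric (not taken from the paper):
        rho = |f_new - f_est| / |f_est - f_old|
    where f_est = f_old - alpha * |grad|^2 is the linear prediction.
    """
    f_old = f(theta)
    for _ in range(steps):
        g = grad(theta)
        g2 = sum(gi * gi for gi in g)
        predicted_drop = alpha * g2  # equals f_old - f_est
        if predicted_drop == 0.0:
            break  # zero gradient (or underflow): nothing left to do

        candidate = [t - alpha * gi for t, gi in zip(theta, g)]
        f_new = f(candidate)
        f_est = f_old - predicted_drop
        rho = abs(f_new - f_est) / predicted_drop

        # Keep the step only if it actually lowered the cost.
        if f_new < f_old:
            theta, f_old = candidate, f_new

        # For small steps rho grows roughly linearly with alpha, so
        # scaling alpha by rho_target / rho pushes rho toward the target.
        # The clamp to [0.5, 2] is a safety choice made for this sketch.
        scale = rho_target / rho if rho > 0.0 else 2.0
        alpha *= min(2.0, max(0.5, scale))
    return theta, f_old, alpha


# Hypothetical test problem: an ill-conditioned quadratic bowl.
def quad(p: Vector) -> float:
    return p[0] ** 2 + 10.0 * p[1] ** 2


def quad_grad(p: Vector) -> Vector:
    return [2.0 * p[0], 20.0 * p[1]]


theta, cost, alpha = adaptive_gd(quad, quad_grad, [3.0, 2.0])
print("theta:", theta, "cost:", cost, "final alpha:", alpha)
```

The starting `alpha` is deliberately small here; the loop raises it on its own within a few steps, which illustrates why a trial run to pick one fixed learning rate becomes unnecessary under this kind of scheme.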
