爱可可 AI Paper Recommendations (October 9) (Part 2)

3. [AI] Human-Level Performance in No-Press Diplomacy via Equilibrium Search

J Gray, A Lerer, A Bakhtin, N Brown

[Facebook AI Research]

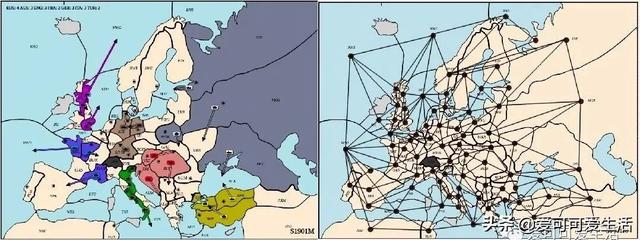

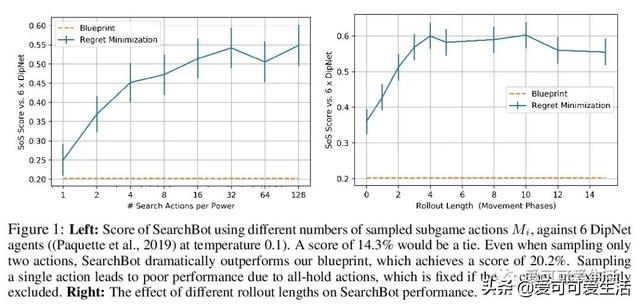

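Reaching human-level play in the classic board game Diplomacy with equilibrium search. Diplomacy is a complex game that involves both cooperation and competition, and it poses major theoretical and practical challenges for AI. The new agent reaches human-level performance by combining supervised learning on human data with one-step lookahead search via external regret minimization. Playing anonymously on a popular Diplomacy website, the agent ranked 23rd among 1,128 human players.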

Prior AI breakthroughs in complex games have focused on either the purely adversarial or purely cooperative settings. In contrast, Diplomacy is a game of shifting alliances that involves both cooperation and competition. For this reason, Diplomacy has proven to be a formidable research challenge. In this paper we describe an agent for the no-press variant of Diplomacy that combines supervised learning on human data with one-step lookahead search via external regret minimization. External regret minimization techniques have been behind previous AI successes in adversarial games, most notably poker, but have not previously been shown to be successful in large-scale games involving cooperation. We show that our agent greatly exceeds the performance of past no-press Diplomacy bots, is unexploitable by expert humans, and achieves a rank of 23 out of 1,128 human players when playing anonymous games on a popular Diplomacy website.
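The abstract above names external regret minimization as the search component. As a rough illustration only, the sketch below runs regret matching, one standard external regret minimization algorithm, on a hypothetical two-player, two-action zero-sum game. The payoff table, the function names, and the two-player setting are all assumptions made for this example; the abstract does not specify the paper's exact procedure, and real Diplomacy has seven players and a far larger action space.

```python
def regret_matching_policy(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total <= 0.0:
        # No action has positive regret yet: fall back to uniform play.
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def run_regret_matching(payoffs, iterations=20000):
    """Run regret matching for both players of a two-player matrix game.

    payoffs[p][a][b] is player p's payoff when player 0 plays a and
    player 1 plays b. Returns each player's average policy.
    """
    n_actions = (len(payoffs[0]), len(payoffs[0][0]))
    regrets = [[0.0] * n for n in n_actions]
    policy_sums = [[0.0] * n for n in n_actions]
    for _ in range(iterations):
        policies = [regret_matching_policy(r) for r in regrets]
        # Expected payoff of each pure action against the opponent's current policy.
        action_values = [
            [sum(policies[1][b] * payoffs[0][a][b] for b in range(n_actions[1]))
             for a in range(n_actions[0])],
            [sum(policies[0][a] * payoffs[1][a][b] for a in range(n_actions[0]))
             for b in range(n_actions[1])],
        ]
        for p in range(2):
            expected = sum(pr * v for pr, v in zip(policies[p], action_values[p]))
            for a in range(n_actions[p]):
                # Regret: how much better action a would have done than the current mix.
                regrets[p][a] += action_values[p][a] - expected
                policy_sums[p][a] += policies[p][a]
    return [[s / iterations for s in sums] for sums in policy_sums]


# Hypothetical toy game (not from the paper): row player's payoffs, zero-sum.
ROW_PAYOFFS = [[2.0, -1.0], [-1.0, 1.0]]
TOY_GAME = [ROW_PAYOFFS, [[-v for v in row] for row in ROW_PAYOFFS]]

average_policies = run_regret_matching(TOY_GAME)
print(average_policies)
```

In this toy game the mixed equilibrium puts probability 0.4 on the first action for both players, and the average policies should move toward that as the number of iterations grows. The current policy at any single iteration can oscillate; it is the average that carries the convergence guarantee in zero-sum games.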

4. [CL] CATBERT: Context-Aware Tiny BERT for Detecting Social Engineering Emails

Y Lee, J Saxe, R Harang

[Sophos AI]

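Detecting phishing emails with a context-aware tiny BERT. To identify hand-crafted social engineering emails that contain no malicious code and share no word choices with known attacks, the authors fine-tune a pre-trained, heavily pruned BERT model and add context features taken from email headers, so that the network learns context representations between an email's content and its context features. The model detects targeted phishing emails effectively even in the presence of deliberate misspellings and adversarial evasion. Using adapters and a context layer, the method outperforms strong baseline models and reaches an 87% phishing detection rate at a 1% false positive rate.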

Targeted phishing emails are on the rise and facilitate the theft of billions of dollars from organizations each year. While malicious signals from attached files or malicious URLs in emails can be detected by conventional malware signatures or machine learning technologies, it is challenging to identify hand-crafted social engineering emails which don't contain any malicious code and don't share word choices with known attacks. To tackle this problem, we fine-tune a pre-trained BERT model by replacing half of the Transformer blocks with simple adapters to efficiently learn sophisticated representations of the syntax and semantics of natural language. Our Context-Aware network also learns the context representations between an email's content and context features from email headers. Our CatBERT (Context-Aware Tiny BERT) achieves an 87% detection rate, compared with DistilBERT, LSTM, and logistic regression baselines, which achieve 83%, 79%, and 54% detection rates respectively, all at a false positive rate of 1%. Our model is also faster than competing transformer approaches and is resilient to adversarial attacks which deliberately replace keywords with typos or synonyms.
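The detection rates in the abstract are quoted at a fixed 1% false positive rate. A minimal sketch of how such a figure can be computed from classifier scores follows. The function name, the convention that a higher score means "more likely phishing", and the example scores are assumptions made for illustration; none of them come from the paper.

```python
def detection_rate_at_fpr(scores, labels, max_fpr=0.01):
    """Highest true positive rate reachable while keeping FPR <= max_fpr.

    scores: classifier outputs, higher means more likely phishing (assumed).
    labels: 1 for phishing, 0 for benign.
    An email is flagged when its score is at or above the chosen threshold.
    """
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one phishing and one benign example")

    # Sweep candidate thresholds from the highest score downward.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    best_tpr = 0.0
    true_pos = 0
    false_pos = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every example tied at this score before evaluating the threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                true_pos += 1
            else:
                false_pos += 1
            i += 1
        if false_pos / negatives > max_fpr:
            break  # lowering the threshold further only adds false positives
        best_tpr = true_pos / positives
    return best_tpr


# Hypothetical scores: 100 benign emails (one scored high) and 4 phishing emails.
benign_scores = [0.9] + [i / 1000 for i in range(99)]
phishing_scores = [0.95, 0.92, 0.5, 0.0505]
all_scores = benign_scores + phishing_scores
all_labels = [0] * len(benign_scores) + [1] * len(phishing_scores)

rate = detection_rate_at_fpr(all_scores, all_labels, max_fpr=0.01)
print(f"detection rate at 1% FPR: {rate:.2f}")
```

Fixing the false positive rate before comparing models, as the abstract does, keeps the comparison fair: each model is allowed the same budget of benign emails wrongly flagged, and only the share of phishing emails caught differs.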
